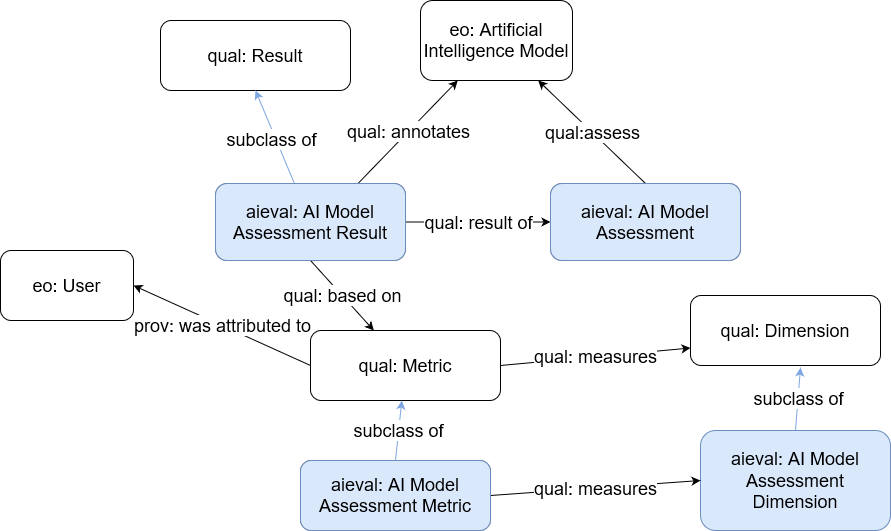

This section provides details for each class and property defined by the AI Method Evaluation Ontology.

Named Individuals

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Adjusted_Rand_Index

- Source

- https://en.wikipedia.org/wiki/Rand_index#Adjusted_Rand_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#AU-ROC

- Source

- https://en.wikipedia.org/wiki/Receiver_operating_characteristic

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#BLEU

- Source

- https://en.wikipedia.org/wiki/BLEU

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Brier_Score

- Source

- https://en.wikipedia.org/wiki/Brier_score

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Calinski-Harabasz_Index

- Source

- https://scikit-learn.org/stable/modules/clustering.html#calinski-harabasz-index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Cohens_Kappa_Coefficient

- Source

- https://en.wikipedia.org/wiki/Cohen%27s_kappa

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Davies-Bouldin_Index

- Source

- https://en.wikipedia.org/wiki/Davies%E2%80%93Bouldin_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Dice_Index

- Source

- https://en.wikipedia.org/wiki/S%C3%B8rensen%E2%80%93Dice_coefficient

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Diversity

- Source

- https://en.wikipedia.org/wiki/Recommender_system#Performance_measures

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Dunn_Index

- Source

- https://en.wikipedia.org/wiki/Dunn_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#F1-score_(macro)

- Source

- https://en.wikipedia.org/wiki/F-score

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#F1-score_(micro)

- Source

- https://en.wikipedia.org/wiki/F-score

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Fowlkes–Mallows_index

- Source

- https://en.wikipedia.org/wiki/Fowlkes%E2%80%93Mallows_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Hamming_Loss

- Source

- https://en.wikipedia.org/wiki/Hamming_distance

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Hopkins_statistic

- Source

- https://en.wikipedia.org/wiki/Hopkins_statistic

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Jaccard_Score

- Source

- https://en.wikipedia.org/wiki/Jaccard_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Mathews_Correlation_Coefficient

- Source

- https://en.wikipedia.org/wiki/Phi_coefficient

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Mean_Absolute_Error

- Source

- https://en.wikipedia.org/wiki/Mean_absolute_error

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Mean_Squared_Error

- Source

- https://en.wikipedia.org/wiki/Mean_squared_error

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#METEOR

- Source

- https://en.wikipedia.org/wiki/METEOR

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#NIST

- Source

- https://en.wikipedia.org/wiki/NIST_(metric)

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Perplexity

- Source

- https://en.wikipedia.org/wiki/Perplexity

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Precision

- Source

- https://en.wikipedia.org/wiki/Precision_and_recall

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Purity

- Source

- https://en.wikipedia.org/wiki/Cluster_analysis#Evaluation_and_assessment

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#R_squared

- Source

- https://en.wikipedia.org/wiki/Coefficient_of_determination

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Rand_Index

- Source

- https://en.wikipedia.org/wiki/Rand_index

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Recall

- Source

- https://en.wikipedia.org/wiki/Precision_and_recall

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Root_Mean_Squared_Error

- Source

- https://en.wikipedia.org/wiki/Root-mean-square_deviation

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#ROUGE

- Source

- https://en.wikipedia.org/wiki/ROUGE_(metric)

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Silhouette_Score

- Source

- https://en.wikipedia.org/wiki/Silhouette_(clustering)

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#True_Negative_Rate

- Source

- https://en.wikipedia.org/wiki/Sensitivity_and_specificity

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#WER

- Source

- https://en.wikipedia.org/wiki/Word_error_rate

- belongs to

- AI Model Assessment Metric

IRI: http://www.w3id.org/iSeeOnto/aimodelevaluation#Youden's_J_statistic

- Source

- https://en.wikipedia.org/wiki/Youden%27s_J_statistic

- belongs to

- AI Model Assessment Metric
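
The IRIs listed above share one namespace and differ only in the fragment after `#`. Some fragments contain characters outside plain ASCII URL syntax, such as the apostrophe in `Youden's_J_statistic` or the en dash in `Fowlkes–Mallows_index`. The following is a minimal sketch, using only the Python standard library, of how a consumer might extract an individual's local name and produce a percent-encoded form of its IRI. The helper names `local_name` and `to_ascii_uri` are hypothetical and not part of the ontology, and whether a given tool expects the encoded or unencoded form is an assumption to check against that tool.

```python
from urllib.parse import quote, urldefrag

# Namespace shared by every IRI listed in this section.
NAMESPACE = "http://www.w3id.org/iSeeOnto/aimodelevaluation"


def local_name(iri: str) -> str:
    """Return the fragment after '#', e.g. 'Brier_Score' (hypothetical helper)."""
    base, fragment = urldefrag(iri)
    if base != NAMESPACE or not fragment:
        raise ValueError(f"not an individual in {NAMESPACE}: {iri!r}")
    return fragment


def to_ascii_uri(iri: str) -> str:
    """Percent-encode the fragment so the IRI is plain ASCII (hypothetical helper)."""
    return f"{NAMESPACE}#{quote(local_name(iri), safe='')}"


if __name__ == "__main__":
    # "\u2013" is the en dash used in the Fowlkes-Mallows fragment.
    for name in ("AU-ROC", "Youden's_J_statistic", "Fowlkes\u2013Mallows_index"):
        print(to_ascii_uri(f"{NAMESPACE}#{name}"))
```

The encoding mirrors what the Wikipedia source links in this section already do for the same characters (`%27` for the apostrophe, `%E2%80%93` for the en dash).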